Adobe Unveils Game-Changing Tool to Shield Artists' Work from AI Exploitation

2024-10-08

Author: Noah

In a groundbreaking move for the creative community, Adobe has announced an expansion of its Content Credentials technology with a new tool aimed at safeguarding artists' work from generative AI. The web app, set to launch in public beta in early 2025, will let creators apply identification information to their digital content, whether images, videos, or audio, so that they receive proper credit and can opt out of having their work used to train AI models.

The Content Authenticity web app serves as a centralized hub for Adobe's existing Content Credentials, which embed tamper-resistant metadata in digital creations. This metadata records who owns the content and how it was created, including any AI tools used in making it. Previously, artists had to submit protection requests for each piece of content individually; the new tool lets them opt out of AI training in bulk, which significantly streamlines the process for creators.

The web app is designed to serve a wide range of users, not just those using Adobe products. It lets creators attach their names, websites, social media links, and more to their content. It also works with Adobe’s Firefly AI models and integrates with popular Creative Cloud applications like Photoshop and Lightroom.

One notable feature of the Content Authenticity web app is the ability to set preferences for generative AI usage, signaling that an artist's work should not be used to train AI models. Adobe's own AI models are trained solely on licensed or public domain content, but the preference could also be honored by other companies willing to support the initiative. Currently, only Spawning, a startup known for its “Have I Been Trained?” tool, has committed to backing this feature.

Adobe claims that the links to Content Credentials will be difficult to erase, employing a suite of technologies including digital fingerprinting, invisible watermarking, and cryptographic metadata. This means that even if someone takes a screenshot of a protected piece of work, the attribution details can be restored, making it significantly more challenging for bad actors to exploit creative content.

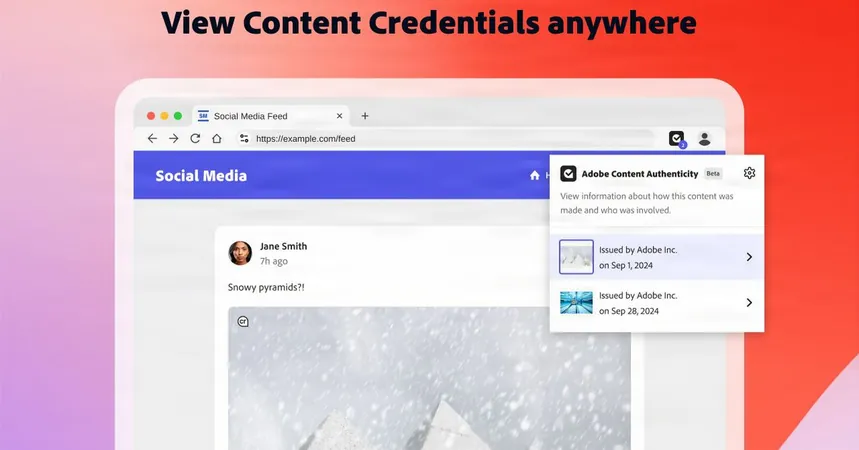

In a bid to further enhance transparency, the web app will include an inspection tool for verifying the presence of Content Credentials on external websites, similar to Meta’s “AI Info” tags. Additionally, a Content Authenticity extension for Google Chrome will be available in beta, allowing users to directly inspect content on various webpages.

The introduction of this tool comes at a time when Adobe is seeking to mend its relationship with the creative community. Amid growing concern over subscription-based pricing and confusion about Adobe’s use of generative AI, the new capabilities of the Content Credentials system aim to address these criticisms by prioritizing accessibility and longevity for artists.

However, a critical concern remains: the Content Authenticity Initiative is voluntary, meaning its effectiveness hinges on adoption by a broad spectrum of tech and AI companies. Although more than 3,700 organizations have aligned with Adobe's initiative to date, the challenge of convincing major players in the AI landscape to adopt these standards could determine the ultimate success of this innovative tool.

With Adobe's ongoing commitment to industry-wide adoption of this technology, creators everywhere may finally have a fighting chance against the unchecked use of their work by generative AI. As we approach the beta launch, the creative community is hopeful that this initiative could restore their faith in Adobe and provide a much-needed shield against unauthorized content exploitation.
