Meta Unleashes Byte Latent Transformer: A Game-Changing LLM with Enhanced Scalability

2025-01-07

Author: Emily

Introduction

Meta has open-sourced its Byte Latent Transformer (BLT), a language model architecture that processes raw bytes instead of tokens from a fixed vocabulary, grouping those bytes into patches with a learned, dynamic scheme. BLT models can rival the performance of Llama 3 while using up to 50% fewer floating-point operations (FLOPs) at inference time, which makes BLT a notable development for large language models (LLMs).

The Strawberry Problem and BLT's Solution

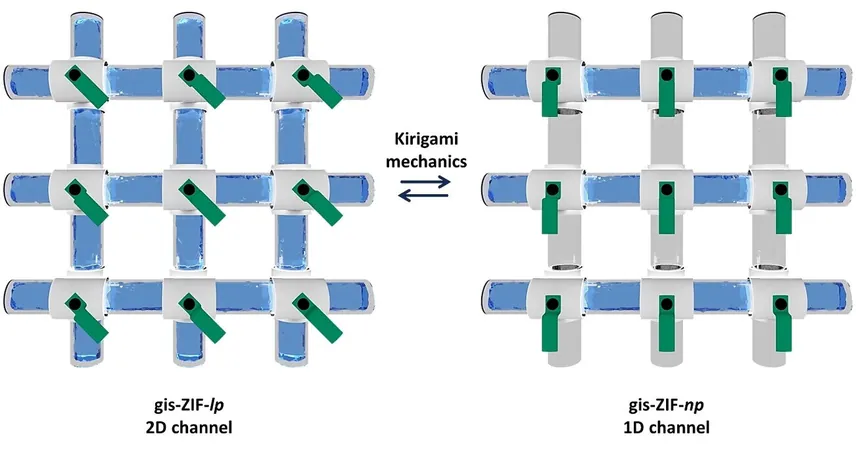

Most LLMs first convert text into tokens drawn from a fixed vocabulary. This approach has pitfalls. One of them is the so-called “strawberry problem”: because a word like “strawberry” reaches the model as a few multi-character tokens rather than as individual letters, models struggle to identify and count the letters it contains. BLT instead groups bytes dynamically into variable-length patches, so information about individual characters is preserved. A compact byte-level language model estimates the probabilities of the next byte, and BLT starts a new patch whenever the entropy of that prediction rises. This tends to happen where the next byte is hard to predict, for example at a word boundary. Besides helping with character-level tasks such as spelling and letter counting, this approach makes the model more robust to noisy inputs, including misspellings and other errors.
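To make the patching rule concrete, here is a minimal Python sketch of entropy-based patching. It is not Meta's implementation. BLT uses a small trained byte-level transformer as its entropy model, whereas this sketch substitutes a simple bigram byte-count model. The names `train_bigram`, `next_byte_entropy` and `entropy_patches`, the toy corpus, and the `threshold` value are all hypothetical. A patch boundary is placed before any byte whose prediction, given the preceding byte, has entropy above the threshold.

```python
import math
from collections import Counter, defaultdict


def train_bigram(corpus: bytes) -> dict:
    """Count how often each byte follows each other byte.

    Hypothetical stand-in for BLT's small trained byte-level language model.
    """
    counts = defaultdict(Counter)
    for prev, nxt in zip(corpus, corpus[1:]):
        counts[prev][nxt] += 1
    return counts


def next_byte_entropy(counts: dict, prev: int) -> float:
    """Entropy in bits of p(next byte | prev); unseen contexts get the 8-bit maximum."""
    followers = counts.get(prev)
    if not followers:
        return 8.0  # uniform over 256 possible bytes
    total = sum(followers.values())
    return -sum((n / total) * math.log2(n / total) for n in followers.values())


def entropy_patches(data: bytes, counts: dict, threshold: float) -> list:
    """Start a new patch before every byte whose prediction entropy exceeds threshold."""
    patches, current = [], bytearray()
    for i, b in enumerate(data):
        # Uncertain prediction of byte i -> close the current patch first.
        if i > 0 and next_byte_entropy(counts, data[i - 1]) > threshold:
            patches.append(bytes(current))
            current = bytearray()
        current.append(b)
    if current:
        patches.append(bytes(current))
    return patches


corpus = b"the cat sat on the mat. the rat ate the hat. the bat sat on the cat."
model = train_bigram(corpus)
print(entropy_patches(b"the rat sat on the hat", model, threshold=1.5))
```

Raising `threshold` yields fewer, longer patches, and lowering it yields more, shorter ones. This sketch uses a fixed global threshold. A rule that fires on a sharp increase relative to the previous byte's entropy would be a straightforward variant.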

Scalability and Adaptability of BLT

One of BLT's standout features is that it can increase the model size and the patch size at the same time while keeping the inference budget fixed. Longer patches mean fewer steps for the large latent transformer, and the compute saved can be spent on a bigger model. This extra degree of freedom is useful in practice, where available compute varies from one deployment to another. The architecture also handles a wide variety of data types well and performs better on long-tail data, meaning data that is rarely encountered but critical for tasks such as low-resource language translation.
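A back-of-the-envelope sketch shows why longer patches free up compute. It assumes the common rule of thumb that a transformer forward pass costs about 2 FLOPs per parameter per processed position. It considers only the large latent (global) model, which runs once per patch, and it ignores the small byte-level local models, whose cost also matters in practice. The function names and the 8B/4-byte reference point are hypothetical illustrations, not figures reported by Meta.

```python
def global_flops_per_byte(params: float, avg_patch_bytes: float) -> float:
    """Approximate forward FLOPs per input byte for a model that runs once per patch.

    Assumes ~2 FLOPs per parameter per processed position (rule of thumb).
    """
    return 2.0 * params / avg_patch_bytes


def max_params_for_budget(flops_per_byte: float, avg_patch_bytes: float) -> float:
    """Largest global model that fits a per-byte inference budget at a given patch size."""
    return flops_per_byte * avg_patch_bytes / 2.0


# Hypothetical reference point: an 8B-parameter global model with 4-byte patches.
budget = global_flops_per_byte(8e9, 4.0)
for patch in (4.0, 6.0, 8.0):
    billions = max_params_for_budget(budget, patch) / 1e9
    print(f"avg patch {patch:.0f} bytes -> up to {billions:.0f}B parameters")
# prints:
# avg patch 4 bytes -> up to 8B parameters
# avg patch 6 bytes -> up to 12B parameters
# avg patch 8 bytes -> up to 16B parameters
```

Under this approximation, doubling the average patch size halves the number of global-model steps per byte, so the same inference budget accommodates roughly twice as many parameters.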

Performance Comparison with Llama 3

Meta's experiments showed that BLT outperformed Llama 3 on a range of character-level tasks, especially those involving noisy inputs. However, attempts to convert an existing pretrained Llama 3 model into BLT led to a "significant" drop in performance across multiple benchmarks. This suggests that BLT achieves its best results when trained end to end.

Community Response and Future Prospects

Discussions on platforms such as Reddit reflect excitement about BLT's potential to address long-standing issues in LLMs. Users have pointed out its promise for multimodal applications, since any type of data can be represented as bytes, which is an advantage as data becomes increasingly diverse. Challenges remain, however, particularly the memory and compute demands of large contexts. For instance, representing 2 MB of data would require a context of 2 million bytes, which underscores the need for further advances in hardware.
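The context-size concern comes down to simple arithmetic. The sketch below takes 2 MB to mean 2,000,000 bytes and uses hypothetical average patch sizes. It compares how many positions a sequence has when modeled byte by byte (patch size 1) with how many a model attending over patches would see. It also counts pairwise attention scores under full attention, which grow quadratically with sequence length. The function `sequence_stats` is illustrative only and is not a memory model of BLT.

```python
def sequence_stats(num_bytes: int, avg_patch_bytes: int) -> tuple:
    """Return (positions, pairwise attention scores) for a sequence split into patches."""
    # Ceiling division: a final partial patch still occupies one position.
    positions = -(-num_bytes // avg_patch_bytes)
    # Full (non-causal) attention compares every position with every other.
    return positions, positions * positions


data_bytes = 2_000_000  # "2 MB", taken here as 2 million bytes
for patch in (1, 4, 8):  # patch size 1 corresponds to plain byte-level modeling
    positions, scores = sequence_stats(data_bytes, patch)
    print(f"patch={patch}: {positions:,} positions, {scores:.2e} attention scores")
# prints:
# patch=1: 2,000,000 positions, 4.00e+12 attention scores
# patch=4: 500,000 positions, 2.50e+11 attention scores
# patch=8: 250,000 positions, 6.25e+10 attention scores
```

Grouping bytes into patches shortens the sequence the large model attends over. The raw input is still 2 million bytes, however, which is why long byte-level contexts remain demanding.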

Conclusion

Meta's Byte Latent Transformer could mark a shift toward more scalable and efficient language modeling. By processing language as bytes, BLT aims to overcome the limitations of traditional tokenization and opens up new possibilities for AI-driven text comprehension and generation. Will BLT redefine the landscape of LLMs as we know it? Only time will tell.
