NVIDIA Unleashes Powerhouse Solutions: Introducing the GB200 NVL4 with Quad Blackwell GPUs & Dual Grace CPUs, Plus the H200 NVL Now Available!

2024-11-18

Author: Chun

A New Era of AI Acceleration

At SC24, NVIDIA made waves by introducing two formidable hardware platforms designed for enterprise servers. One is built on the established Hopper stack and the other on the latest Blackwell architecture, and both are aimed at accelerated HPC (high-performance computing) and AI workloads across industries.

H200 NVL: Now Ready for Action!

The spotlight first shines on the newly available NVIDIA H200 NVL. This solution consists of PCIe-based Hopper cards that can connect up to four GPUs through an NVLink domain. According to NVIDIA, that link delivers seven times the bandwidth of a standard PCIe connection. NVIDIA also emphasizes that the H200 NVL can fit into any data center, with flexible server configurations optimized for hybrid HPC and AI tasks.
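As a rough sanity check on the "seven times" figure, the sketch below compares two bandwidth numbers. Both constants are assumptions that the article does not state: 900 GB/s for per-GPU NVLink bandwidth on Hopper and 128 GB/s for a PCIe Gen5 x16 slot, each counted bidirectionally. The function name is purely illustrative.

```python
# Assumed figures (not given in the article), both bidirectional, in GB/s.
NVLINK_GBPS = 900.0          # assumed per-GPU NVLink bandwidth on Hopper
PCIE_GEN5_X16_GBPS = 128.0   # assumed bandwidth of a PCIe Gen5 x16 slot


def bandwidth_ratio(fast_gbps: float, slow_gbps: float) -> float:
    """Return how many times more bandwidth the first link has than the second."""
    if slow_gbps <= 0:
        raise ValueError("slow_gbps must be positive")
    return fast_gbps / slow_gbps


ratio = bandwidth_ratio(NVLINK_GBPS, PCIE_GEN5_X16_GBPS)
print(f"NVLink vs PCIe Gen5 x16: about {ratio:.1f}x")
```

Under those assumptions the ratio comes out at roughly seven, which matches the claim quoted above.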

From a performance standpoint, the H200 NVL offers 1.5 times more HBM memory than the H100 NVL, along with gains of up to 1.7x in LLM inference and 1.3x in HPC performance. Each card provides 16,896 CUDA cores across 132 streaming multiprocessors (SMs), alongside 528 tensor cores, for more than 3 PFLOPS of FP8 performance with sparsity. It carries 141 GB of HBM3e memory on a 6144-bit interface and has a configurable TDP of up to 600 watts.

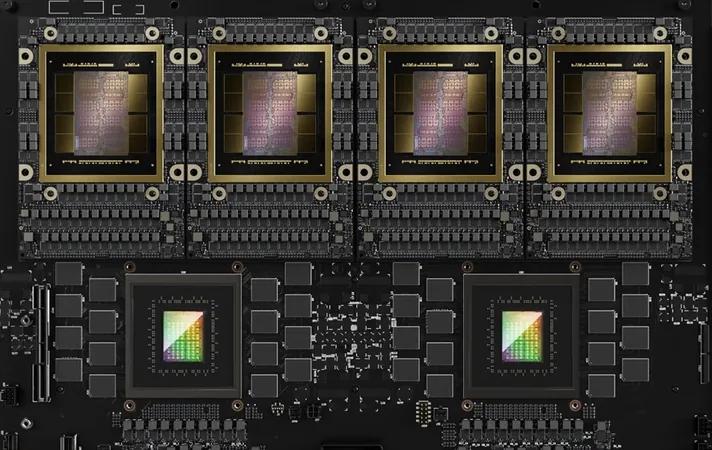

Introducing the Blackwell GB200 NVL4: A Quantum Leap in Processing Power

Next up, NVIDIA showcases the innovative GB200 NVL4, a completely new module that acts as an expanded version of the original GB200 Grace Blackwell Superchip AI solution. This module distinguishes itself by doubling both CPU and GPU capacities and significantly enhancing memory capabilities.

With four Blackwell GPUs and two Grace CPUs working together, the NVL4 is engineered as a single-server powerhouse, featuring a 4-GPU NVLink domain and a staggering 1.3 terabytes of coherent memory. Compared with the previous generation, users can anticipate a 2.2x improvement in simulation performance and a 1.8x boost in both training and inference. NVIDIA's partners are set to roll out the NVL4 solution in the coming months.

In terms of power consumption, the original Superchip module has a TDP of approximately 2,700 W, so the larger GB200 NVL4, which doubles the CPU and GPU count, is expected to draw close to 6 kW. The figure illustrates how far NVIDIA is willing to push power budgets to move AI computing into uncharted territory.
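The power estimate can be reproduced with simple arithmetic. The sketch below assumes power scales linearly with the number of CPUs and GPUs, which is a simplification rather than a published NVIDIA figure; the function and variable names are illustrative only.

```python
SUPERCHIP_TDP_W = 2700   # approximate Superchip TDP quoted in the article
NVL4_SCALE = 2           # the NVL4 doubles the CPU and GPU count


def estimate_module_power_w(base_tdp_w: float, scale: float) -> float:
    """Estimate module power, assuming it scales linearly with component count."""
    return base_tdp_w * scale


nvl4_estimate_w = estimate_module_power_w(SUPERCHIP_TDP_W, NVL4_SCALE)
print(f"Estimated GB200 NVL4 power: {nvl4_estimate_w / 1000:.1f} kW")
```

Linear scaling gives about 5.4 kW, which is in line with the "close to 6 kW" expectation once rounding and extra overhead are considered.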

What Does This Mean for the Future?

NVIDIA's advancements herald a monumental shift in the capabilities of AI and HPC solutions, positioning them as indispensable tools for enterprises seeking to leverage intelligent processing at scale. As industries grapple with increasingly complex data and the demand for faster processing grows, NVIDIA’s commitment to innovation might just be the key to unlocking previously unimaginable opportunities.

Stay tuned as NVIDIA continues to reshape the landscape of computing. Could this be the turning point for AI applications everywhere? Only time will tell!
