RSF Calls for Ban on Apple Intelligence Summary After False Report on Luigi Mangione Incident

2024-12-19

Author: Chun

Introduction

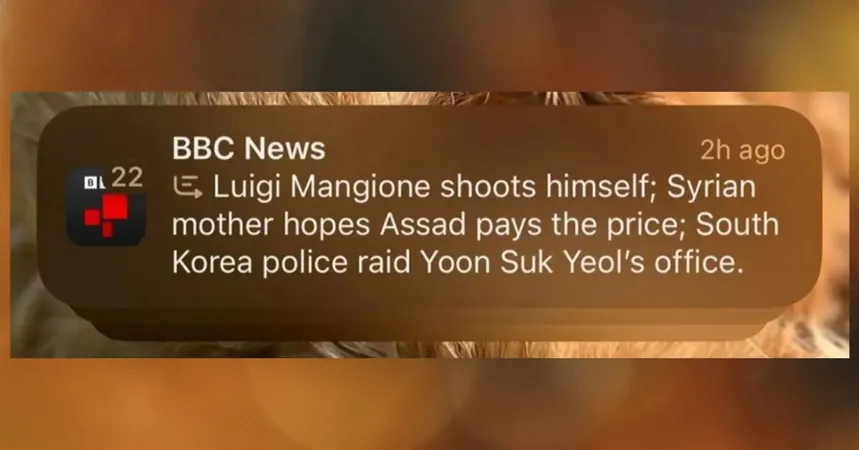

Reporters Sans Frontières (RSF) has called for a ban on Apple Intelligence's summary feature after it falsely reported that Luigi Mangione had shot himself. The incident raises serious questions about the reliability of AI in news dissemination.

The Incident

The controversy erupted after the Apple feature incorrectly claimed that Luigi Mangione, the suspect in the high-profile murder of UnitedHealthcare CEO Brian Thompson, had shot himself. The mistake did not go unnoticed: the BBC, considered one of the most trusted news organizations globally, filed a complaint with Apple. A BBC spokesperson expressed concern, emphasizing the importance of trust in journalism and the impact of misinformation on the broadcaster's credibility.

Response from BBC

"We have reached out to Apple to address and rectify this issue promptly," the spokesperson said. However, Apple has yet to provide a public response regarding the complaint.

Concerns Raised by RSF

RSF's call comes amid growing apprehension about the effect of generative AI on journalism and the accuracy of information. Vincent Berthier, RSF's technology lead, argued that relying on AI systems, which he described as "probability machines," to produce factual news could result in catastrophic misinformation and undermine public trust in legitimate news sources.

The Importance of Journalistic Integrity

"In this digital age, the automated generation of false narratives attributed to reputable media outlets poses an unacceptable risk to journalistic integrity and the public's right to accurate information," Berthier stated.

Conclusion

In an age where misinformation spreads quickly, RSF underscores the potential dangers of AI tools that are not yet reliable for generating accurate news. As AI technologies continue to evolve, the industry needs to establish clear guidelines and accountability mechanisms to prevent such embarrassing errors from recurring.

This incident is a reminder of the pressing need for transparency and responsibility in the emerging relationship between technology and journalism, particularly as consumers rely more heavily on digital news summaries. As debate intensifies over the role of AI in media, the open question is whether major tech firms will prioritize accuracy or continue to gamble with public trust. For now, the call to ban misleading AI features is growing louder.
