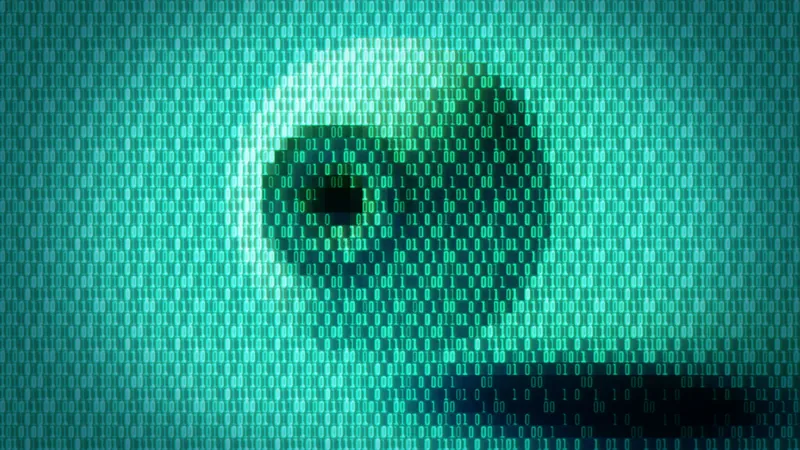

Uncovering the Hidden Threat: Invisible Text Exploits in AI Chatbots

2024-10-14

Author: Ling

Imagine a world where nefarious hackers can sneak malicious instructions into popular AI chatbots, such as Claude or Microsoft Copilot, using invisible characters that remain undetectable to human eyes. Yes, this unsettling reality exists, tapping into an obscure quirk of the Unicode text encoding standard, creating covert avenues for cybercriminals to exfiltrate sensitive information with ease.

The exploit involves the use of invisible characters—essentially, characters that are recognized by large language models (LLMs) while remaining invisible to users. This capability allows for the embedding of harmful payloads within the chat prompts of advanced AI models. Furthermore, it can also conceal exfiltrated data such as passwords and financial details when appended to messages from chatbots. The combination of hidden and visible text can allow unsuspecting users to unknowingly transmit sensitive information.

In a striking revelation, AI engineer Joseph Thacker described how this phenomenon radically alters the landscape of AI security, stating, "The understanding of invisible tags by models like GPT-4 and Claude Opus has made attacks more feasible in various domains." This development raises significant security concerns for both organizations and individual users.

The ASCII Smuggling Technique: A Cybersecurity Game Changer

Termed “ASCII smuggling,” this devious method has been demonstrated in various proof-of-concept attacks by researcher Johann Rehberger. Earlier this year, he effectively exploited Microsoft 365 Copilot's functionality, showing how malicious actors could use this technique to pull sensitive information from users' inboxes without their knowledge. Rehberger's experiments involved guiding Copilot to search for confidential data, such as sales figures or one-time passcodes, before encoding and transmitting this information via invisible characters hidden in URLs.

To the casual observer, these links appear harmless and innocuous. However, in the background, the hidden characters relay confidential messages back to an external server. Fortunately, after being alerted to these vulnerabilities, Microsoft has since rolled out mitigation strategies to combat ASCII smuggling.

Both ASCII smuggling and prompt injection (the method of inserting untrustworthy content into AI prompts) are part of an alarming trend where LLMs are susceptible to hidden commands and hidden contexts that even advanced users may fail to detect. Rehberger's innovative techniques highlight how unguarded chatbots can become leaky vessels for sensitive data.

The Unicode Standard: A Double-Edged Sword for Security

The Unicode standard, which encompasses an extensive range of characters used globally, also contains a block of invisible characters. Originally intended for tagging language indicators, these characters were never fully utilized. Consequently, they are now being exploited for malicious prompts in LLMs, as noted by researchers in the field. This includes individuals such as Riley Goodside, who showcased similar vulnerabilities with GPT-3.

"My aim was to establish if invisible text could serve as a conduit for secret prompts in LLM engines," shared Goodside, who successfully demonstrated this method through a proof-of-concept attack against ChatGPT.

Though many major LLMs, including OpenAI’s offerings and Microsoft’s Copilot, have since made changes to their systems following these revelations, the very nature of LLMs being able to interpret such hidden data underscores fundamental security gaps in AI development. With some chatbots still able to read and write hidden characters, cyber attackers may forever remain one step ahead of cybersecurity defenses.

Future Implications and the Road Ahead

As organizations increasingly rely on AI technologies, the need to prioritize security in AI development becomes evident. The integration of invisible text as a potential exploit is only one facet of a broader concern over how LLMs understand data differently than humans.

Glaring questions remain: Could these hidden characters be leveraged for more extensive attacks within secure networks? What comprehensive measures must be employed to monitor and mitigate the risks posed by ASCII smuggling and its variants?

In an evolving digital landscape, experts emphasize urgent action is needed to develop advanced safeguards against these hidden threats. "While it may be relatively easy to prevent the current issue," Goodside warns, "the more extensive challenges tied to LLMs comprehending information that humans can’t will loom for the foreseeable future."

As researchers continue to explore and expose these vulnerabilities, the tech world must remain vigilant and proactive in addressing the unseen dangers lurking behind AI chatbots. Only through effective security measures and constant innovation can we hope to keep our sensitive data safe from exploitation.

Brasil (PT)

Brasil (PT)

Canada (EN)

Canada (EN)

Chile (ES)

Chile (ES)

España (ES)

España (ES)

France (FR)

France (FR)

Hong Kong (EN)

Hong Kong (EN)

Italia (IT)

Italia (IT)

日本 (JA)

日本 (JA)

Magyarország (HU)

Magyarország (HU)

Norge (NO)

Norge (NO)

Polska (PL)

Polska (PL)

Schweiz (DE)

Schweiz (DE)

Singapore (EN)

Singapore (EN)

Sverige (SV)

Sverige (SV)

Suomi (FI)

Suomi (FI)

Türkiye (TR)

Türkiye (TR)