MIT AI Learns to Imitate Everyday Sounds the Way Humans Do

2025-01-09

Author: Wei

Introduction

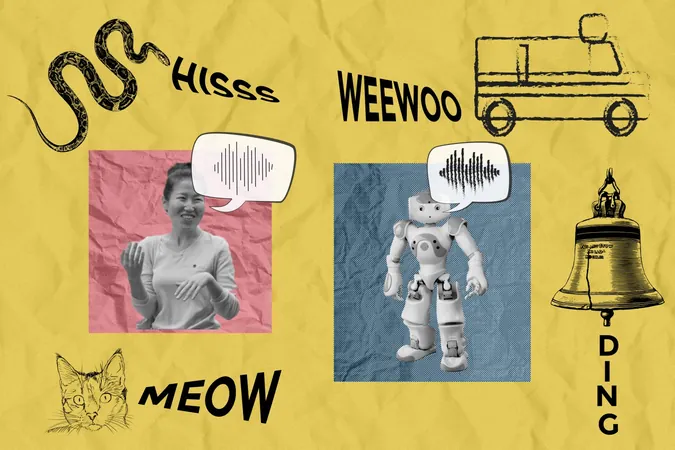

Have you ever noticed how imitating sounds can turn an ordinary conversation into a vivid storytelling session? Whether it’s mimicking the roar of a faulty car engine or the gentle purring of a neighbor's cat, our innate ability to imitate can enhance communication when words fall short. But what if artificial intelligence could master this art?

The Breakthrough at MIT

Researchers at the Massachusetts Institute of Technology's Computer Science and Artificial Intelligence Laboratory (CSAIL) have unveiled an AI system that can produce remarkably human-like vocal imitations of everyday sounds, without any training on, or prior exposure to, human vocal impressions. The work could change how machines interact with us and how they make sense of the sounds around them.

How It Works

The scientists built a model of the human vocal tract that simulates how sound is shaped by the throat, tongue, and lips. A cognitively inspired AI algorithm then controls this model to imitate diverse sounds, from the soft rustle of leaves to the blare of an ambulance siren, by perceiving and interpreting auditory cues much as people do.
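
To make the idea concrete, here is a minimal Python sketch of the general "imitate by synthesis" pattern: try different settings of a sound-producing model and keep the one whose output sounds closest to the target. It is not the CSAIL team's model or algorithm. The single-resonance "vocal tract", the probe-frequency "hearing", the exhaustive grid search, and every name and value in it (`synthesize`, `features`, `imitate`, the 8000 Hz sample rate, the candidate pitches and formants) are assumptions chosen purely for illustration.

```python
import math

SAMPLE_RATE = 8000  # samples per second (toy value, an assumption)
N_SAMPLES = 400     # 50 ms of audio
PROBE_HZ = range(100, 4000, 100)  # frequencies at which sounds are compared


def synthesize(pitch_hz, formant_hz, bandwidth_hz=300.0):
    """Toy 'vocal tract': harmonics of a pitch, shaped by a single resonance."""
    harmonics = []
    k = 1
    while k * pitch_hz < SAMPLE_RATE / 2:
        freq = k * pitch_hz
        # Harmonics near the resonance (formant) pass through at higher gain.
        gain = 1.0 / (1.0 + ((freq - formant_hz) / bandwidth_hz) ** 2)
        harmonics.append((freq, gain))
        k += 1
    return [
        sum(g * math.sin(2 * math.pi * f * t / SAMPLE_RATE) for f, g in harmonics)
        for t in range(N_SAMPLES)
    ]


def features(samples):
    """Crude 'hearing': signal amplitude at each probe frequency (a partial DFT)."""
    feats = []
    for f in PROBE_HZ:
        w = 2 * math.pi * f / SAMPLE_RATE
        re = sum(s * math.cos(w * t) for t, s in enumerate(samples))
        im = sum(s * math.sin(w * t) for t, s in enumerate(samples))
        feats.append(math.hypot(re, im) / (len(samples) / 2))
    return feats


def imitate(target_samples, pitches=(100, 200, 300, 400, 500),
            formants=(500, 1000, 1500, 2000)):
    """Grid-search the control settings whose output is closest to the target."""
    target = features(target_samples)
    best_params, best_distance = None, float("inf")
    for pitch in pitches:
        for formant in formants:
            candidate = features(synthesize(pitch, formant))
            distance = sum((a - b) ** 2 for a, b in zip(candidate, target))
            if distance < best_distance:
                best_params, best_distance = (pitch, formant), distance
    return best_params, best_distance


# Stand-in for a real-world sound: a steady 1000 Hz tone, loosely siren-like.
target = [math.sin(2 * math.pi * 1000 * t / SAMPLE_RATE) for t in range(N_SAMPLES)]
best_params, best_distance = imitate(target)
print("closest (pitch_hz, formant_hz):", best_params)
```

Here the target is a steady tone, and the search returns the pitch and resonance settings whose output best matches it at the probe frequencies. A realistic system would need a far richer vocal tract model, a perceptually informed notion of "closest", and a smarter search than exhaustive enumeration.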

Potential Applications

This technology has far-reaching potential applications. Imagine sound designers using these imitations to create more immersive experiences in films or virtual reality environments. Educators could harness this system to help students learn new languages more intuitively by allowing them to hear accurate vocalizations of words and phrases.

Experimental Findings

In the team's experiments, human judges favored the AI's imitations over human-made ones 25% of the time overall, and as much as 75% of the time for some sounds, such as a motorboat. This could prove useful in fields from the arts to linguistics, as creators look for tools that can convey complex soundscapes using simple vocal expressions.

Insights from Researchers

Kartik Chandra, one of the lead researchers, notes, "The essence of auditory imitation goes beyond mere sound replication; it delves into how we perceive auditory experiences." His insights point to a potential shift in the tools available to artists and creators. Imagine artists describing the sound they want with nothing but their voice and letting an AI system generate the result, with no need for specialized software or extensive sound libraries.

Broader Implications

Even more intriguingly, the research raises profound questions about language development and communication. Professor Robert Hawkins of Stanford University, who was not involved in the research, says the model represents a significant step in understanding the evolution of language and sound representation. He states, "This could illuminate the nuanced relationship between physical sound production and social dynamics in shaping language."

Challenges Ahead

Despite the promising advances, the researchers acknowledge that challenges remain. Their model currently struggles with certain sounds and cannot yet capture how the same sound is imitated differently across cultures, a reminder of how complex and multifaceted language is as a communication tool.

Conclusion

As the team at MIT continues to refine its model, we are left to ponder the implications of this technology. Will it transform language learning? Could it lead to AI companions that understand us not just through words but through sounds, emotion, and expression? One thing is for sure: this work opens exciting frontiers in how we connect with machines.
