Self-Supervised Learning Method ContextSSL Adapts to New Tasks Without Retraining

2024-12-23

Author: Arjun

Introduction to Machine Learning Paradigms

The world of machine learning has often been categorized into two well-known types: “supervised” and “unsupervised” learning. Supervised learning trains algorithms on labeled datasets, where each input is carefully matched with a corresponding output, providing a clear roadmap for the algorithm. On the other hand, unsupervised learning relies solely on the raw data, compelling the algorithm to identify patterns independently, without explicit guidance.

Emergence of Self-Supervised Learning (SSL)

Alongside these conventional paradigms sits an approach known as “self-supervised learning” (SSL). This methodology constructs labels directly from raw data, reducing the heavy reliance on human experts to annotate datasets. Because the algorithm derives its own training signal, it can learn from large unlabeled datasets that would be impractical to annotate by hand.
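As a rough illustration of how labels can be built from raw data, the sketch below hides one word of an unlabeled sentence at a time and uses the hidden word as the label. Masked-word prediction is only one common pretext task and is not claimed to be the method discussed in this article; the function name `make_masked_examples` and the `[MASK]` token are assumptions made for the example.

```python
def make_masked_examples(sentence, mask_token="[MASK]"):
    """Turn one unlabeled sentence into (input, label) pairs.

    Each pair hides a single word; the hidden word becomes the label,
    so no human annotation is needed. (Illustrative pretext task only.)
    """
    words = sentence.split()
    examples = []
    for i, target in enumerate(words):
        masked = words[:i] + [mask_token] + words[i + 1:]
        examples.append((" ".join(masked), target))
    return examples


pairs = make_masked_examples("labels come from data")
for masked_input, label in pairs:
    print(f"{masked_input!r} -> {label!r}")
```

Every training pair here comes from the sentence itself, which is the sense in which the supervision is "self" generated.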

Applications and Limitations of Traditional SSL

SSL is now applied across natural language processing (NLP), computer vision, bioinformatics, and speech recognition. Traditional SSL methods generally pull the representations of semantically similar data (positive pairs) close together while pushing those of dissimilar data (negative pairs) apart. Positive pairs are typically created through data augmentation: two views of the same input are produced by altering aspects such as color, texture, or orientation.
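The pull-together, push-apart objective can be sketched with an InfoNCE-style contrastive loss. The article does not name a specific loss, so InfoNCE, the temperature value, and the toy two-dimensional embeddings below are all assumptions chosen for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE loss for one anchor: -log softmax of the positive similarity.

    The loss is small when the positive is much closer to the anchor
    than every negative is. (Assumed loss; not taken from the article.)
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    max_logit = max(logits)  # subtract the max for numerical stability
    log_denominator = max_logit + math.log(
        sum(math.exp(logit - max_logit) for logit in logits)
    )
    return log_denominator - logits[0]


# Toy embeddings: the anchor and two negatives stay fixed.
anchor = [1.0, 0.0]
negatives = [[-1.0, 0.2], [0.0, -1.0]]

loss_close = info_nce(anchor, [0.9, 0.1], negatives)  # positive near anchor
loss_far = info_nce(anchor, [0.0, 1.0], negatives)    # positive far from anchor
print(loss_close < loss_far)
```

Minimizing this loss moves the positive pair together and the negatives away, which is the behavior the paragraph above describes.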

Challenges of Inductive Priors in SSL

However, these methods impose strong “inductive priors” (built-in assumptions about the dataset’s features) that can hinder adaptability across diverse tasks. For example, a model trained to ignore orientation is poorly suited to a later task that depends on it. In response, researchers from MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) and the Technical University of Munich have introduced a technique called "Contextual Self-Supervised Learning" (ContextSSL).

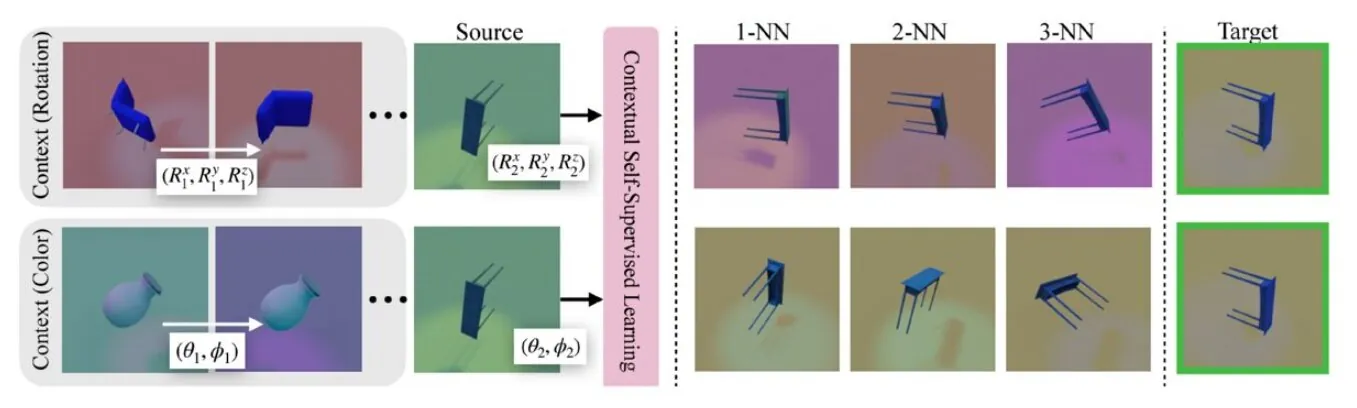

ContextSSL: A Revolutionary Approach

ContextSSL does not depend on a fixed set of pre-defined data augmentations. Instead, it draws on context, in the manner of world models, which capture an agent's environment, including its dynamics and structure. With this contextual awareness, the method learns representations that adapt to different tasks and transformations without being retrained for each one.

Technical Innovation Behind ContextSSL

The researchers employed a transformer module to encode contextual information as sequences of state-action-next-state triplets, reflecting prior experiences with various transformations. This mechanism allows the model to selectively enforce invariance or equivariance depending on the context it is given, so that its behavior matches the task at hand.
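To make the terms concrete, here is a toy sketch of what state-action-next-state triplets and the invariance/equivariance distinction can look like. It is not the ContextSSL implementation: the 2-D points, the use of rotation as the "action", and every function name are hypothetical stand-ins, and no transformer is involved.

```python
import math


def rotate(point, angle):
    """Rotate a 2-D point; stands in for a data transformation (the action)."""
    x, y = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y)


def build_context(points, angles):
    """Flatten each (state, action, next_state) triplet into one token."""
    tokens = []
    for point, angle in zip(points, angles):
        next_point = rotate(point, angle)
        tokens.append([*point, angle, *next_point])
    return tokens


def invariant_feature(point):
    """Distance from the origin: unchanged by rotation (invariance)."""
    return math.hypot(*point)


def equivariant_feature(point):
    """Polar angle: shifts by the rotation angle, modulo 2*pi (equivariance)."""
    return math.atan2(point[1], point[0])


# Hypothetical context: three past experiences with the rotation transformation.
points = [(1.0, 0.0), (0.0, 2.0), (1.0, 1.0)]
angles = [math.pi / 2, math.pi, 0.0]
context = build_context(points, angles)

# Compare both features before and after a quarter-turn.
p = (1.0, 0.0)
q = rotate(p, math.pi / 2)
print(invariant_feature(p), invariant_feature(q))      # same distance
print(equivariant_feature(p), equivariant_feature(q))  # angle shifted
```

In this toy setting, a task that should ignore rotation would use the invariant feature, while a task that needs to track rotation would use the equivariant one. The article's claim is that ContextSSL makes this choice from the context sequence rather than fixing it in advance.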

Expert Perspectives on ContextSSL

"One of our primary objectives is to create representations that become increasingly sensitive to the transformation group as context is expanded," explains CSAIL Ph.D. student Sharut Gupta. "We are moving away from the need to adjust models for each scenario, aiming instead for a versatile model that can react to environments in a manner akin to human adaptability."

Empirical Validation and Performance Metrics

The effectiveness of ContextSSL has been validated experimentally, with substantial performance improvements reported on computer vision benchmarks, including 3DIEBench and CIFAR-10, that require both invariance and equivariance. Beyond vision, on the MIMIC-III dataset, which holds vital medical information, ContextSSL demonstrated that it can respect gender-specific requirements in treatment predictions while ensuring fairness in evaluating outcomes.

Conclusion and Future Implications

Dilip Krishnan, a Senior Staff Research Scientist at Google DeepMind, commended the work for pushing the boundaries of what self-supervised learning can achieve. "Rather than pre-determining the properties of invariance or equivariance, this study presents a smarter way to approach these features based on the task's demands," he noted, emphasizing the transformative potential of this research.

In summary, ContextSSL offers a more flexible form of self-supervised learning: instead of fixing in advance which transformations a model ignores and which it tracks, the model makes that choice from context, without retraining for each task. As the approach continues to develop, it could improve efficiency, flexibility, and performance across a wide array of fields.
