Unveiling the Universe: Frontier Supercomputer Breaks Records in Astrophysical Simulations

2024-11-25

Author: Jacob

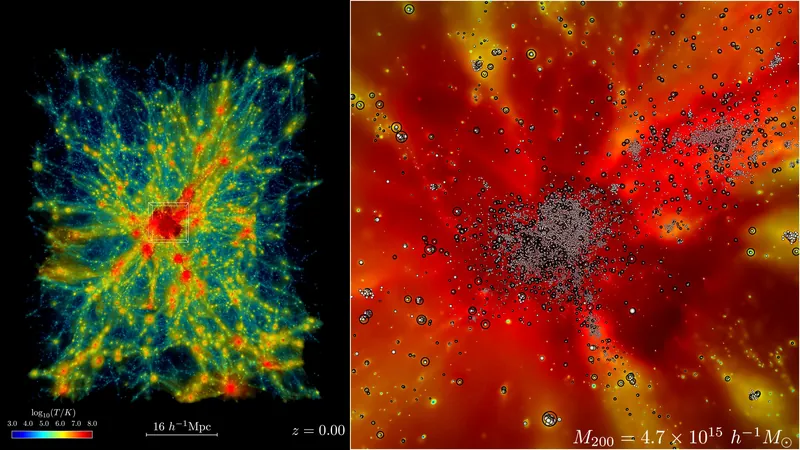

In a major step for scientific computing, researchers at the Department of Energy's Argonne National Laboratory have used the Frontier supercomputer at Oak Ridge National Laboratory to run the largest astrophysical simulation of the universe to date. The simulation, completed in early November, sets a new standard for cosmological hydrodynamics simulations and advances our understanding of both atomic and dark matter.

Project lead Salman Habib, the division director for Computational Sciences at Argonne, explains the dual role of dark and atomic matter in the cosmos. "To truly understand the universe's behavior, we must model both these aspects: gravity, alongside a plethora of complex physics including hot gas movements, star formation, and the genesis of black holes and galaxies. It's akin to creating an astrophysical 'kitchen sink'—a comprehensive simulation of the universe," he stated.

The project demanded far more computation than gravity-only simulations. Habib elaborates, "Simulating vast sections of the universe, like those analyzed by significant telescopes such as Chile's Rubin Observatory, necessitates accounting for billions of years of cosmic expansion, an undertaking that was previously deemed impractical."

The feat was accomplished using the Hardware/Hybrid Accelerated Cosmology Code (HACC), software initially designed about 15 years ago for petascale machines. HACC has been extensively upgraded as part of ExaSky, an initiative within the Exascale Computing Project (ECP). The ECP brought together thousands of experts to develop scientific applications and software tools for the coming era of exascale supercomputers, which can execute a quintillion or more calculations per second.

Over the last seven years, the HACC team has greatly expanded the code's capabilities and optimized it for next-generation systems powered by AMD's Instinct MI250X GPUs. HACC ran nearly 300 times faster than it did on older supercomputers, enabling a previously unattainable level of accuracy and detail in simulations. The run used roughly 9,000 compute nodes on the Frontier supercomputer, housed at the Oak Ridge Leadership Computing Facility (OLCF).

Bronson Messer, OLCF's director of science, emphasized the groundbreaking nature of these simulations: "It's not just about the immense scope necessary for meaningful comparisons with modern observational surveys; it's also about the physical realism brought into play by including baryonic matter alongside dynamic physics, solidifying this simulation as a phenomenal success for Frontier."

The achievement was a collaborative effort by a team that included Michael Buehlmann, JD Emberson, Katrin Heitmann, Patricia Larsen, Adrian Pope, Esteban Rangel, and Nicholas Frontiere.

Before this simulation, the team ran parameter scans for HACC on the Perlmutter supercomputer at the National Energy Research Scientific Computing Center (NERSC), and the code was also tested extensively on the Aurora supercomputer at the Argonne Leadership Computing Facility (ALCF).

This leap in astrophysical simulation not only highlights the power of supercomputing technology but is also a testament to humanity's enduring quest to understand the mysteries of the universe. With exascale computing now a reality, the potential for further discoveries about the cosmos is enormous. Stay tuned!
