New Research Reveals Blind Spots in AI Models for Wildlife Image Retrieval

2024-12-18

Author: Yu

Introduction

A new study has found significant shortcomings in current computer vision models, particularly in their ability to retrieve specific wildlife images from large nature datasets. North America alone has roughly 11,000 tree species, and nature image datasets hold millions of photos of species ranging from butterflies to humpback whales. Researchers rely on these collections to study ecological phenomena, including species behavior, migration, and responses to climate change.

The Limitations of Current Research Methods

These nature image datasets are a remarkable resource, but they are not yet as useful to researchers as they could be, because searching them manually is labor-intensive and inefficient. Automated research assistants built on multimodal vision language models (VLMs), which process both text and images, could help. These tools have the potential to streamline the retrieval of relevant images based on scientific queries.

Evaluating VLMs: The INQUIRE Dataset

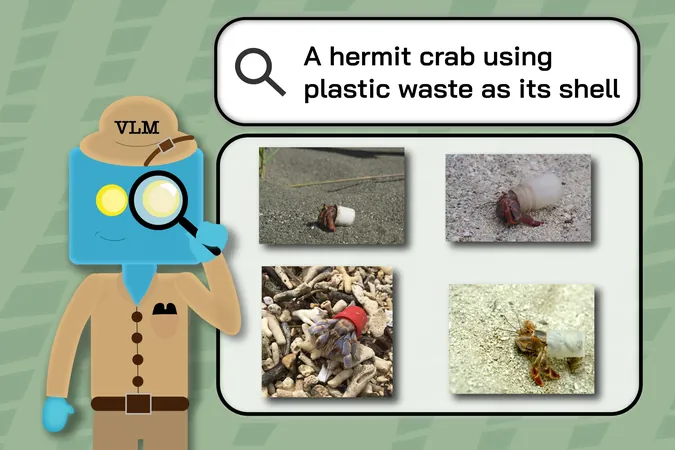

But how effective are VLMs in aiding ecological research? A collaborative team from MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL), University College London, iNaturalist, University of Edinburgh, and UMass Amherst set out to test this by evaluating the VLMs with a dataset called "INQUIRE." This dataset contains 5 million wildlife images alongside 250 specific search prompts provided by ecologists.
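As a rough illustration of what text-to-image retrieval involves, the sketch below ranks images by how closely their embeddings match a query embedding. It is a minimal example, not the team's method: the cosine-similarity ranking, the function names, the file names, and the three-dimensional toy vectors are all assumptions made for illustration. In practice a VLM would produce the embeddings.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)


def rank_images(query_embedding, image_embeddings, top_k=3):
    """Return the top_k image ids, most similar to the query first."""
    scored = [
        (cosine_similarity(query_embedding, embedding), image_id)
        for image_id, embedding in image_embeddings.items()
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [image_id for _, image_id in scored[:top_k]]


# Hypothetical toy embeddings; real ones would come from a VLM
# and have hundreds of dimensions.
toy_images = {
    "jellyfish_on_beach.jpg": [0.9, 0.1, 0.0],
    "green_frog.jpg": [0.1, 0.9, 0.1],
    "redwood_trunk.jpg": [0.0, 0.2, 0.9],
}
toy_query = [0.8, 0.2, 0.1]  # stands in for an embedded text prompt

print(rank_images(toy_query, toy_images, top_k=2))
```

With these toy vectors, the jellyfish image ranks first because its embedding points in nearly the same direction as the query.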

Results of the Study

Larger, advanced VLMs performed adequately on straightforward queries, such as identifying anomalies in underwater reefs, but faltered when faced with technical language or complex biological conditions. For instance, the models successfully found jellyfish on a beach but struggled significantly with prompts such as “axanthism in a green frog,” which refers to a condition in which the frog lacks yellow pigment. The gap reflects the models' limited grasp of specialized scientific terminology.

The Need for Domain-Specific Training

Edward Vendrow, a Ph.D. student at MIT who co-led the research, emphasized the necessity of domain-specific training. “By exposing VLMs to more specialized datasets, we hope to enhance their utility in retrieving precise images that are critical for biodiversity monitoring and climate change analysis,” he stated.

Creating the INQUIRE Dataset

The INQUIRE dataset was built in collaboration with experts, including ecologists and biologists, who described the kinds of searches they would conduct. Annotators spent 180 hours curating the dataset, labeling around 33,000 images that matched specific prompts, such as a California condor tagged for research purposes. Yet the tests revealed that VLMs often retrieved irrelevant images because they did not fully grasp scientific vocabulary. For instance, searches for “redwood trees with fire scars” sometimes returned images of unaffected trees.
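Labeled matches like these make it possible to score a ranked list of retrieved images. The sketch below computes average precision, one common retrieval measure. The article does not say which metric the team used, so the choice of metric here is an assumption, and the function name and image ids are hypothetical.

```python
def average_precision(ranked_ids, relevant_ids):
    """Average precision of a ranked list against a set of relevant ids.

    Precision is sampled at each rank where a relevant image appears,
    then averaged over the total number of relevant images.
    """
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for rank, image_id in enumerate(ranked_ids, start=1):
        if image_id in relevant:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / len(relevant)


# Hypothetical result list for "redwood trees with fire scars":
# an unscarred tree is ranked above one of the true matches.
ranked = ["scarred_1", "unscarred_7", "scarred_2", "unscarred_3"]
labeled_matches = {"scarred_1", "scarred_2"}

score = average_precision(ranked, labeled_matches)
print(round(score, 3))
```

A perfect ranking would score 1.0. Here the unscarred tree in second place pulls the score down, which is the kind of error the fire-scar example describes.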

Reflecting on Data Gathering

Sara Beery, an assistant professor at MIT and a co-senior author on the project, highlighted the importance of this meticulous data gathering. “Understanding the current capabilities of VLMs in accurately processing ecological data is crucial,” she remarked, noting how these findings pinpoint gaps in research, particularly concerning complex scientific queries.

Future Directions

Looking forward, the research team is working to improve VLMs on the challenges the study highlighted, including information retrieval for intricate queries. The diversity of the INQUIRE queries also suggests that VLMs could eventually perform well in research disciplines beyond ecology.

Expert Opinions

Justin Kitzes of the University of Pittsburgh stressed the urgency of this research, stating, “As biodiversity datasets grow, finding efficient search methods becomes increasingly vital. This research tackles a pressing challenge in uncovering comprehensive data about species characteristics and interactions.”

Conclusion

Serge Belongie, director of the Pioneer Center for Artificial Intelligence, remarked on the two sides of the study's findings: “While this work signifies a remarkable advancement in our grasp of multimodal models in scientific research, it also starkly highlights the ongoing challenges in executing detailed text-to-image retrieval, a task that continues to be complex and nuanced.” As scientists continue to refine these VLMs, the hope is that they will not only improve image retrieval in ecological studies but also benefit other fields that require meticulous observation and analysis.
